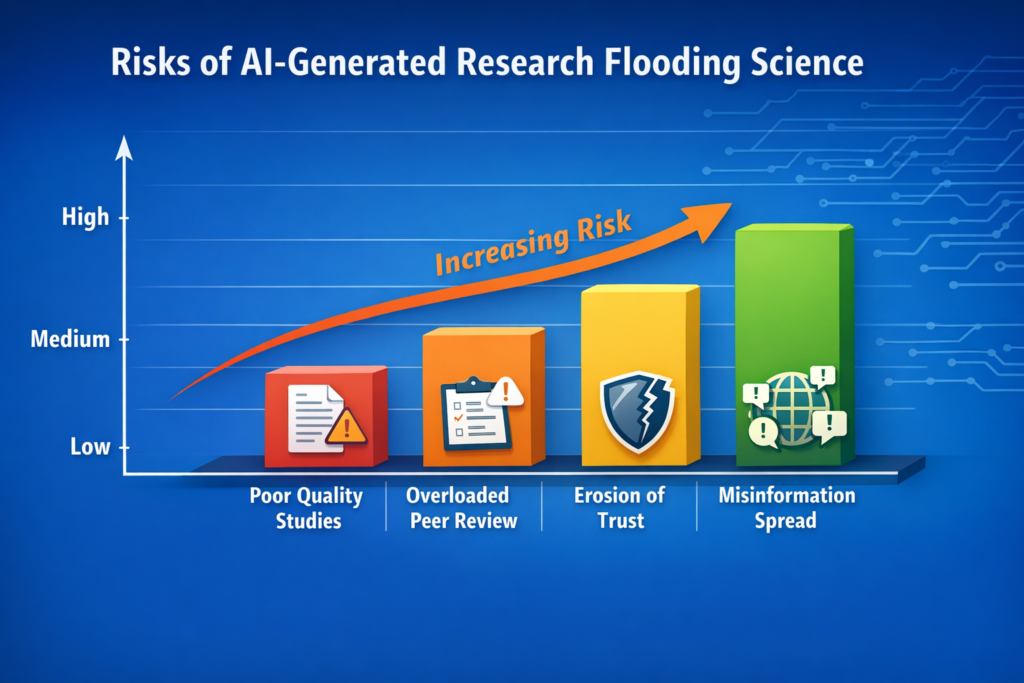

Artificial intelligence has already reshaped how research is conducted, from speeding up data analysis to helping scientists draft papers more efficiently. But as AI tools become more powerful and accessible, concerns are growing about unintended consequences. A new generation of AI systems can now generate full academic papers in minutes, complete with citations, charts, and formal language. While this capability may sound like a breakthrough, many researchers fear it could undermine the integrity of scientific publishing. At the center of the debate is the risk that AI-generated research could overwhelm journals with poorly designed, misleading, or outright flawed studies. Scientists and journal editors warn that the rapid rise of such tools may blur the line between genuine scientific inquiry and automated content production, making it harder to distinguish meaningful research from noise.

Scientists warn a new AI tool could flood research with low-quality studies by dramatically lowering the barrier to producing academic papers. What once required months or years of careful experimentation and peer collaboration can now be mimicked in hours using automated systems trained on vast libraries of existing research. While these tools do not conduct experiments or generate new knowledge, they can convincingly imitate the structure and tone of real science, which is where the concern lies. Researchers worry that this surge of AI-written papers could strain an already overloaded peer-review system. Journals may struggle to filter out low-quality submissions, and valuable scientific findings could be buried under a growing pile of superficial or misleading studies. The concern is not just about volume, but about trust—trust in published research, in citations, and in the scientific record itself.

New AI Tool Could Flood Research with Low-Quality Studies

| Key Aspect | Details |
|---|---|
| Main Concern | AI tools can mass-produce research papers without real experiments |
| Who Is Alarmed | Scientists, journal editors, and research integrity experts |
| Core Risk | Flooding journals with low-quality or misleading studies |
| Peer Review Impact | Increased workload and higher risk of flawed papers slipping through |
| Long-Term Effect | Erosion of trust in scientific literature |
| Suggested Response | Stronger review standards and AI-detection safeguards |

The warning that a new AI tool could flood research with low-quality studies is not a rejection of innovation, but a call for caution. Science depends on trust, rigor, and transparency, values that cannot be automated. As AI tools continue to evolve, the responsibility lies with researchers, publishers, and institutions to protect the integrity of the scientific record. Handled carefully, AI can remain a useful ally in research. Handled recklessly, it risks turning scientific publishing into a numbers game, where volume outweighs value. The choices made now will shape the credibility of research for years to come.

How the AI Tool Works

- The AI tools raising concern are designed to generate academic-style text by analyzing millions of existing research papers. They can replicate the language, formatting, and citation patterns common in scholarly writing. Some tools even create fictional datasets or simulate results that appear statistically sound at first glance.

- While these systems are often marketed as “research assistants,” critics argue that they go far beyond assisting. By producing complete manuscripts, they allow users to bypass the core scientific process—forming hypotheses, collecting data, testing results, and refining conclusions. The output may look legitimate, but it lacks the substance that real science requires.

The Growing Pressure on Peer Review

- Peer review is already under stress. Editors frequently report difficulty finding qualified reviewers, and reviewers themselves are stretched thin by growing submission volumes. The introduction of AI-generated papers could make this problem worse.

- Reviewers are trained to assess methodology, logic, and relevance, not to detect whether a paper was written by a machine. Subtle errors, fabricated references, or overly generic conclusions may escape notice, especially when reviewers are working under time pressure. Scientists warn that the system could be gamed, allowing weak or unreliable studies to enter the scientific record.

Risks to Research Quality and Credibility

One of the most serious concerns is how low-quality AI-generated studies could contaminate future research. Scientists rely heavily on published work when designing experiments, conducting reviews, or building theoretical models. If flawed studies are cited and reused, errors can multiply across fields. This is especially dangerous in sensitive areas such as medicine, climate science, and public health. In these fields, inaccurate findings can influence policy decisions, clinical practices, or public understanding. Scientists fear that even a small number of AI-generated studies slipping through could have outsized consequences.

Incentives Driving the Problem

The academic system itself may unintentionally encourage misuse of AI tools. Many researchers face intense pressure to publish frequently to secure funding, promotions, or tenure. In such an environment, tools that promise faster publication can be tempting. Early-career researchers and scholars in underfunded institutions may feel especially vulnerable. Without proper guidance or oversight, AI tools could be used to pad publication records rather than advance knowledge. Scientists emphasize that the issue is not malicious intent alone, but structural incentives that reward quantity over quality.

Detection and Regulation Challenges

Detecting AI-generated research is not straightforward. Unlike plagiarism, which involves copying existing text, AI-generated content is often original in form, even if derivative in substance. Traditional plagiarism software is poorly equipped to flag such material. Some publishers are experimenting with AI-detection tools, but these systems are imperfect and can produce false positives. There is also concern about fairness: legitimate researchers who use AI for editing or language support may be unfairly scrutinized. Scientists argue that clear guidelines are needed to distinguish acceptable assistance from unethical automation.

Calls for Responsible Use of AI

- Despite the warnings, most scientists do not advocate banning AI from research altogether. Instead, they call for responsible and transparent use. AI can be valuable for tasks such as data organization, language editing, and literature searches when used appropriately.

- Experts suggest that journals require authors to disclose how AI tools were used in the research and writing process. Others propose stricter methodological checks and more rigorous data verification during peer review. Education also plays a key role: researchers need training to understand both the power and the limits of AI.

The Future of Scientific Publishing

The debate over AI-generated research highlights a broader question about the future of science in a digital age. Technology has always shaped how research is done, but rarely has it challenged the boundary between genuine discovery and automated imitation so directly. Scientists warn that without proactive measures, the research ecosystem could be overwhelmed by content that looks scientific but lacks real insight. At the same time, thoughtful regulation and cultural shifts within academia could ensure that AI strengthens, rather than weakens, scientific progress.

FAQs on New AI Tool Could Flood Research with Low-Quality Studies

What is the new AI tool scientists are warning about?

The concern is not limited to one single tool, but to a growing class of AI systems capable of generating full academic papers.

Why do scientists believe AI could flood research with low-quality studies?

Because AI can produce research-style papers quickly and at scale, it lowers the effort needed to submit studies to journals.

Does AI-generated research always contain false information?

Not always, but the risk is high. AI systems generate text based on patterns in existing research, not on real-world experiments or verification.

How could this affect peer-reviewed journals?

Peer-reviewed journals may become overwhelmed with submissions, making it harder for reviewers to carefully evaluate each paper.