New AI Tool Could Flood Research With Low-Quality Studies

AI is making big waves again, and not everyone’s cheering. A brand-new research assistant powered by artificial intelligence, recently unveiled by OpenAI, has sparked alarm across the global science community. The concern? It could flood the academic world with low-quality, AI-generated studies, clogging up scholarly journals, overwhelming peer reviewers, and making it harder to tell what’s real science and what’s just polished “AI slop.” Yeah, we said it: AI slop. It’s not just slang; it’s becoming a serious concern. In this article, we’ll dive deep into why scientists are speaking up, what this means for real-world research, how to spot “fake-smart” papers, and what steps professionals and readers like you can take to push back against the growing tide of questionable science.



The rise of AI-generated research has lit a fire under the academic world — and rightly so. Tools like OpenAI’s research assistant offer power and potential, but also risk and responsibility. We’re at a fork in the road. We can either flood journals with polished noise or rise to the challenge with smarter standards, stronger integrity, and sharper tools. Because no matter how clever the tech gets, real science still needs real minds and real work behind it.

| Topic | Details |
|---|---|
| Main Concern | AI tools like OpenAI’s Research Assistant could flood academic publishing with low-quality studies |
| What’s Driving This | The tool helps generate papers, summarize studies, and write like a pro, but critics say it lowers quality and originality |
| Nickname for Risk | “AI Slop”: polished, passable but unscientific output |
| Real-World Impact | Overwhelmed peer-review process, erosion of trust in science, and risk of fake findings |
| Stats | 17% increase in suspicious submissions at top conferences (NeurIPS, 2025); Over 40% of early submissions in 2026 used generative AI tools |
| Expert Opinions | Scientists from University of Surrey, editorial boards at Nature and Science, researchers at NeurIPS, OpenAI spokespersons |
| Official Source | OpenAI |

What Is the Controversy Over the New AI Tool?

The buzz began when OpenAI, the folks behind ChatGPT, introduced a research assistant tool aimed at academic professionals. The assistant can help generate entire research papers, simplify complex scientific literature, and even suggest citations and titles — all in minutes.

Sounds like a productivity dream, right?

But the catch? Not every user is a responsible one. And when these tools land in the hands of folks who want quick publications over careful science, that’s where trouble starts.

As one senior researcher told WebProNews:

“It’s like giving people the power to sound smart without the work. That’s dangerous for science.”

The Rise of “AI Slop” – A Growing Problem in Research

“AI slop” is the nickname being tossed around in conference halls, Slack chats, and editorial meetings. It refers to content that sounds smart but lacks true rigor, originality, or depth, kind of like dressing up a 10-cent idea in $10 words.

These AI-crafted papers:

- Follow formatting rules perfectly.

- Use real-sounding citations.

- Include proper sections like abstracts, methods, and discussion.

- But… are often hollow or factually off-base.

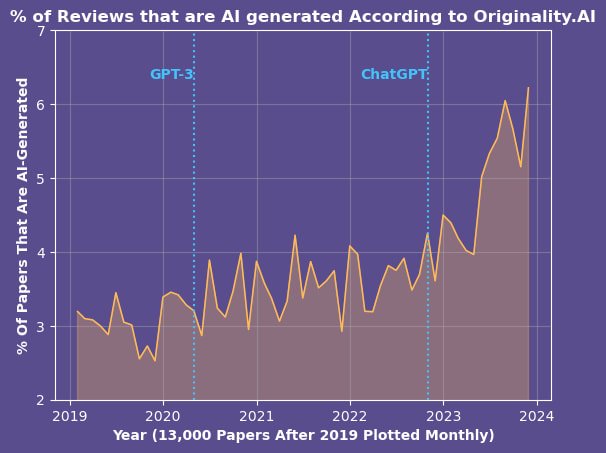

A January 2026 analysis by the International Association of Scientific Editors found that:

- 40% of early 2026 submissions to certain computer science and biomedical journals were assisted by AI tools.

- Out of those, 28% were flagged during peer review for lack of novelty, unsupported conclusions, or recycled analysis.

In other words, AI slop is not a fringe issue anymore — it’s landing in inboxes at scale.
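To see what those two percentages imply together, here is a quick back-of-the-envelope sketch. It assumes both figures refer to the same pool of submissions, which the analysis as quoted does not state explicitly; the function name is purely illustrative.

```python
def flagged_share(assisted_rate: float, flagged_given_assisted: float) -> float:
    """Share of ALL submissions that were both AI-assisted and flagged in review."""
    return assisted_rate * flagged_given_assisted


# Figures quoted above: 40% AI-assisted, and 28% of those flagged.
# Assumption: both percentages describe the same set of submissions.
share = flagged_share(0.40, 0.28)
print(f"{share:.1%} of all submissions were AI-assisted and flagged")
```

Under that assumption, roughly one in nine submissions in the sample was both AI-assisted and flagged by reviewers.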

What Makes This AI Tool Different From Past Tech Disruptions?

Now, tech’s always been part of research — from calculators to MATLAB to LaTeX. But this feels different. Why?

1. AI writes, not just calculates.

It doesn’t just assist — it generates entire papers.

2. It mimics human tone expertly.

Even seasoned reviewers can be fooled by perfect grammar and professional tone — while the actual ideas are weak.

3. It scales dangerously fast.

Unlike ghostwriters or paper mills, one AI user can churn out multiple papers a day with just prompts.

As a NeurIPS 2025 program chair said:

“We’re getting submissions that look fine on the surface — until you try to replicate the results or trace the logic. Then it all falls apart.”

Real-World Examples: How It’s Playing Out Already

Let’s get specific. At NeurIPS and ICLR 2025, two of the biggest AI research conferences:

- Submissions were up 30% from 2024.

- Yet acceptance rates dropped due to suspicious content quality.

- Dozens of papers were flagged for possible “fabrication of novelty” — essentially, reworded ideas from prior work passed off as original.

In one case, a paper cited nonexistent studies — made up by the AI tool.

Meanwhile, a team at the University of Surrey analyzed over 1,000 research papers in public databases. Their findings?

- 22% showed signs of AI authorship, especially in the abstract and conclusion sections.

- 14% included errors in data interpretation — often presenting weak or unrelated correlations as major findings.

These are papers that could be influencing policy, education, or healthcare decisions. That’s why the stakes are high.

The Real Danger: Undermining the Integrity of Science

What happens if this trend continues? Let’s break it down:

1. Peer Review Breaks Down

Editors and reviewers are already swamped. With more AI-written submissions, they spend more time filtering and less time improving good science.

2. Trust in Journals Takes a Hit

Readers — especially students, journalists, and policymakers — may lose faith in published studies if garbage gets through.

3. Fraud Gets Easier

AI-generated text can be a tool for bad actors: plagiarism, fake authorship, and data laundering all become easier.

4. The Next Gen Learns the Wrong Lessons

When students see AI-written papers get published, it normalizes cutting corners.

This isn’t just an inconvenience. It’s an existential threat to how we build knowledge.

What Journals, Scientists, and Readers Can Do About It

Now for the good news: We’re not helpless. Here’s how different groups can help push back against the rise of AI slop.

For Scientists & Researchers:

- Use AI transparently.

Disclose the tools used in the methods or acknowledgments section.

- Rely on human rigor.

Don’t outsource analysis or conclusions. Let AI help draft, not dictate.

- Double-check outputs.

Always verify data, citations, and conclusions manually.
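As a starting point for the citation part of that double-check, here is a minimal sketch that sorts a reference list into entries with a plausibly shaped DOI and entries that need a manual look first. Everything in it is an assumption for illustration: the `triage_citations` helper, the sample entries, and the DOI pattern, which tests shape only. A well-formed DOI can still point to nothing, so this narrows the manual work rather than replacing it.

```python
import re

# Assumption: a commonly used pattern for modern DOIs. It checks the shape only,
# not whether the DOI actually resolves to a real paper.
DOI_PATTERN = re.compile(r"^10\.\d{4,9}/[-._;()/:A-Z0-9]+$", re.IGNORECASE)


def triage_citations(citations):
    """Split citations into plausible-DOI entries and entries to check by hand first."""
    plausible, needs_review = [], []
    for entry in citations:
        doi = (entry.get("doi") or "").strip()
        if DOI_PATTERN.match(doi):
            plausible.append(entry)
        else:
            needs_review.append(entry)
    return plausible, needs_review


# Hypothetical reference list, for illustration only.
refs = [
    {"title": "Example paper A", "doi": "10.1234/example.2025.001"},
    {"title": "Example paper B", "doi": ""},
    {"title": "Example paper C", "doi": "not-a-doi"},
]

ok, review = triage_citations(refs)
print("Check by hand first:", [r["title"] for r in review])
```

Entries that pass still need to be looked up and read; the point is only to surface missing or malformed identifiers early.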

For Journal Editors:

- Add AI-detection tools.

Start screening submissions for generative patterns.

- Update author guidelines.

Require declarations of AI use, similar to conflicts of interest.

- Invest in reviewer training.

Teach reviewers how to spot AI-assisted “slop.”

For Students, Readers, and Curious Minds:

- Be skeptical, not cynical.

Don’t assume every paper is fake, but don’t take anything at face value.

- Check citations and affiliations.

Look for real-world backing, not just polished prose.

- Use post-publication scrutiny tools.

Websites like Scite.ai, PubPeer, and Retraction Watch offer insights into paper validity.

The Broader Ethical Debate: Power vs. Responsibility

This issue ties into a bigger conversation: Just because AI can do something, should it?

AI isn’t evil — but like any tool, its impact depends on how we use it. When it’s used to shortcut effort, mimic brilliance, or game the system, it undermines the very point of research: to seek truth, not just to publish.

OpenAI has acknowledged that tools like its research assistant must come with strong guardrails and clear community norms. Until those are in place, it’s up to humans to make ethical choices.

As one editor put it:

“AI can accelerate discovery — or dilute it. It’s our call.”
